Activity tracker progress

2025-06-15 00:23

My goal at the moment is to get a database up to date with all my Garmin data dating back to 2018. Since my watch only stores the latest n .fit files, I had to request a data export from Garmin Connect. This came zipped up with my .fit files in three different folders, named differently to the files I download from my watch. Since the export, I have logged newer files on my watch, and I download them each time I plug it into my laptop, via a garmin_sync.py script that runs continuously as a systemd service.

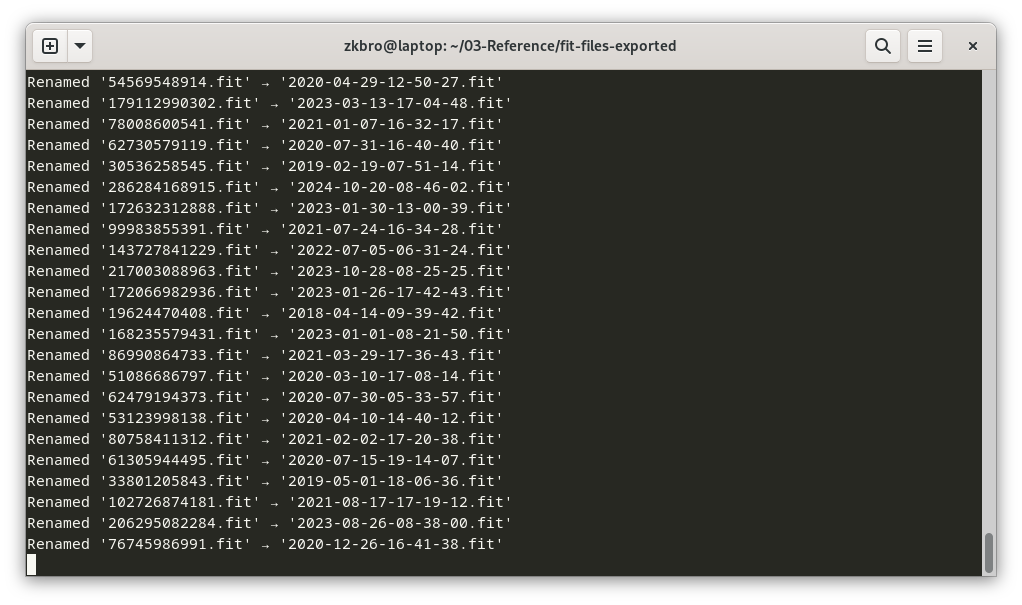

The first step was getting all my files in the one place, all following the same filename convention. The .fit files I'm downloading from my watch are copied over in the %Y-%m-%d-%H-%M-%S.fit format. The Garmin Connect data came over as "my@email.com_activityID.fit". I've decided to roll with the %Y-%m-%d-%H-%M-%S format. I created a rename_files.py script which reads each .fit file in the folder, pulls out the 'start_time' record, and renames the file accordingly.
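For anyone wanting to try something similar, here is a minimal sketch of how a renaming step like this could work. It is not the actual rename_files.py: the function names are made up for illustration, and `read_start_time` is a placeholder for whatever pulls 'start_time' out of a .fit file (for example, a thin wrapper around a FIT parsing library such as fitparse). Files without a start_time are left alone, and nothing is ever overwritten.

```python
from datetime import datetime
from pathlib import Path
from typing import Callable, Optional

# Same convention as the files copied from the watch.
NAME_FORMAT = "%Y-%m-%d-%H-%M-%S"


def target_name(start_time: datetime) -> str:
    """Build the watch-style filename for an activity start time."""
    return start_time.strftime(NAME_FORMAT) + ".fit"


def rename_fit_files(
    folder: Path,
    read_start_time: Callable[[Path], Optional[datetime]],
) -> tuple[int, int]:
    """Rename each .fit file in `folder` after its start_time.

    `read_start_time` is a hypothetical helper that returns the activity
    start time, or None when the file has no start_time record.
    Returns a (renamed, skipped) count.
    """
    renamed = skipped = 0
    # sorted() materialises the listing before any file is renamed.
    for path in sorted(folder.glob("*.fit")):
        start_time = read_start_time(path)
        if start_time is None:
            skipped += 1  # no start_time record, keep the original name
            continue
        destination = path.with_name(target_name(start_time))
        if destination.exists():
            skipped += 1  # never overwrite an existing file
            continue
        path.rename(destination)
        renamed += 1
    return renamed, skipped
```

Passing the reader in as a function keeps the renaming logic independent of any particular FIT library, which also makes it easy to dry-run against a fake reader first.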

When I first looked in my exported files, I noted there were 28,859 of them. That would indicate 28,859 activities over 7 years, or roughly 11.3 activities per day. I'm pretty active, but not that active. Once I ran my renaming script, it picked up 2,119 start_time records, or roughly 0.83 activities per day. That seems more accurate, though still a little overkill. I'll explore the data a bit later (including the files that didn't make the cut).
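The back-of-the-envelope numbers above can be checked in a few lines. This assumes "7 years" means 7 × 365 days, ignoring leap days:

```python
DAYS = 7 * 365  # roughly seven years, ignoring leap days

files_in_export = 28_859        # every .fit file in the Garmin Connect export
files_with_start_time = 2_119   # files the renaming script found a start_time in

per_day_all = files_in_export / DAYS
per_day_activities = files_with_start_time / DAYS

print(f"{per_day_all:.1f} files per day")
print(f"{per_day_activities:.2f} activities per day")
```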

After eyeballing the renamed files against my current watch-exported files, it looked like the script had renamed them correctly (there was an overlap from 2024-08 to present). I copied everything over to the one folder (minus the overlap).
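A copy that skips the overlap could be sketched like this. It assumes that an overlapping activity ends up with exactly the same filename in both folders (that is, the start times match to the second); `merge_fit_files` is an illustrative name, not something from the actual scripts.

```python
import shutil
from pathlib import Path


def merge_fit_files(source: Path, destination: Path) -> list[str]:
    """Copy .fit files from `source` into `destination`.

    Files whose name already exists in `destination` are treated as
    overlap and skipped. Returns the names that were copied.
    """
    destination.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(source.glob("*.fit")):
        target = destination / path.name
        if target.exists():
            continue  # already present from the watch downloads
        shutil.copy2(path, target)  # copy2 also preserves timestamps
        copied.append(path.name)
    return copied
```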

Now for some fun stuff (sshhh I'm having a ball already 🤓). Let's look at the data. For the database I have decided to try out DuckDB - because why not? New project, new tools. I am also going to try out the Harlequin SQL IDE, which has a DuckDB adapter. This thing is rad. It's a database client that runs in the terminal (well at least a terminal emulator... I haven't tried the actual terminal). Anyway, I haven't done too much with SQL before, and only used pandas dataframes a few times. I'm most familiar with geodatabases in Esri's ArcGIS. Though, from what I'm reading, DuckDB can be a powerful GIS tool too.

I'm getting sidetracked. First I need to create the database. Here again I created a custom Python script, called create_database.py. I'm a genius with these script names, aren't I? It creates an activities.duckdb file out of all the .fit files. This took a few minutes, and got the fan on my laptop spinning pretty hard. Some would say a "high intensity" workout. har har.
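As a rough illustration of the idea (not the actual create_database.py, which reads far more out of each file), here is a stripped-down sketch that builds a DuckDB table from nothing but the filenames. The `activities` table, its two columns, and both function names are assumptions made for this example. It needs the third-party `duckdb` package, which is only imported when the load function is called.

```python
from datetime import datetime
from pathlib import Path

NAME_FORMAT = "%Y-%m-%d-%H-%M-%S"


def build_rows(folder: Path) -> list[tuple[str, datetime]]:
    """Turn renamed .fit files into (file_name, start_time) rows.

    The start time is parsed from the filename; files that do not
    follow the naming convention are ignored.
    """
    rows = []
    for path in sorted(folder.glob("*.fit")):
        try:
            start_time = datetime.strptime(path.stem, NAME_FORMAT)
        except ValueError:
            continue  # not in %Y-%m-%d-%H-%M-%S form
        rows.append((path.name, start_time))
    return rows


def load_into_duckdb(db_path: str, rows: list[tuple[str, datetime]]) -> None:
    """Insert rows into a hypothetical `activities` table."""
    import duckdb  # third-party: pip install duckdb

    con = duckdb.connect(db_path)
    try:
        con.execute(
            "CREATE TABLE IF NOT EXISTS activities ("
            "file_name VARCHAR PRIMARY KEY, start_time TIMESTAMP)"
        )
        if rows:  # skip the insert entirely when there is nothing to add
            con.executemany(
                "INSERT INTO activities VALUES (?, ?) ON CONFLICT DO NOTHING",
                rows,
            )
    finally:
        con.close()


if __name__ == "__main__":
    # Hypothetical folder name; adjust to wherever the .fit files live.
    load_into_duckdb("activities.duckdb", build_rows(Path("fit_files")))
```

Because `file_name` is the primary key and conflicts are ignored, running this again after new files arrive should only add the new ones.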

Now to view the data in Harlequin, I type:

    harlequin --adapter duckdb activities.duckdb

And with a little SQL...

Beautiful. At a glance it looks like all my activities are coming through.

Next steps will be to:

- update the garmin_sync.py script to append new activities to the database

- look at generating GPX files from the .fit files, and include them in DuckDB as a related (spatial) table

- figure out where I want to create and store public and private notes and photos for activities

- the big one - syncing to my website.
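For the GPX item in the list above, the core of the conversion is small. FIT files store positions as "semicircles" rather than degrees, so each point needs converting before it goes into a GPX track. The sketch below assumes the points have already been read from the .fit file as (latitude, longitude, timestamp) tuples with UTC timestamps; the function names and the `creator` string are placeholders.

```python
import xml.etree.ElementTree as ET
from datetime import datetime

# FIT positions are signed 32-bit "semicircles": 2**31 semicircles = 180 degrees.
SEMICIRCLE_TO_DEGREES = 180 / 2**31
GPX_NAMESPACE = "http://www.topografix.com/GPX/1/1"


def semicircles_to_degrees(value: int) -> float:
    """Convert a FIT semicircle value to decimal degrees."""
    return value * SEMICIRCLE_TO_DEGREES


def build_gpx(points) -> str:
    """Build a single-track GPX document.

    `points` is an iterable of (lat_semicircles, lon_semicircles, timestamp)
    tuples, with timestamps assumed to be in UTC.
    """
    gpx = ET.Element(
        "gpx",
        {"version": "1.1", "creator": "fit-to-gpx-sketch", "xmlns": GPX_NAMESPACE},
    )
    segment = ET.SubElement(ET.SubElement(gpx, "trk"), "trkseg")
    for lat, lon, timestamp in points:
        point = ET.SubElement(
            segment,
            "trkpt",
            {
                "lat": f"{semicircles_to_degrees(lat):.7f}",
                "lon": f"{semicircles_to_degrees(lon):.7f}",
            },
        )
        ET.SubElement(point, "time").text = timestamp.strftime("%Y-%m-%dT%H:%M:%SZ")
    return ET.tostring(gpx, encoding="unicode")
```

Elevation, heart rate, and other per-point fields are left out here to keep the sketch short.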

Overall, enjoying this fun little project, exploring new tools, and where it's all heading.